Web3 Infra Series | Building the Data Layer for a Composable Internet

Published on Jun 23, 2025

Every single swipe, purchase, click, and comment is data, and it’s

considered the raw material of the digital world.

Every piece of that data in today’s modern internet is owned, stored,

and monetized by someone else. The data economy has already begun, but

most of the value generated from it is extracted by platforms, rather

than the actual users.

Social media platforms track your engagement, e-commerce sites store

your buying behavior, and loyalty apps lock in your preferences. Each

company builds a moat around your activity, treating your actions as

proprietary insight, so what you do on one platform is stuck there,

with no continuity when you interact elsewhere.

Your reputation, history, and context don’t follow you, even though

you’re actually the source of it all. This reveals a much deeper

structural issue.

Data has become one of the most important resources in the online

world, but the people generating it have very little visibility or

leverage over how it’s used. Web3 infrastructure challenges this

imbalance by designing systems that provide control, mobility, and

benefits to the end-users.

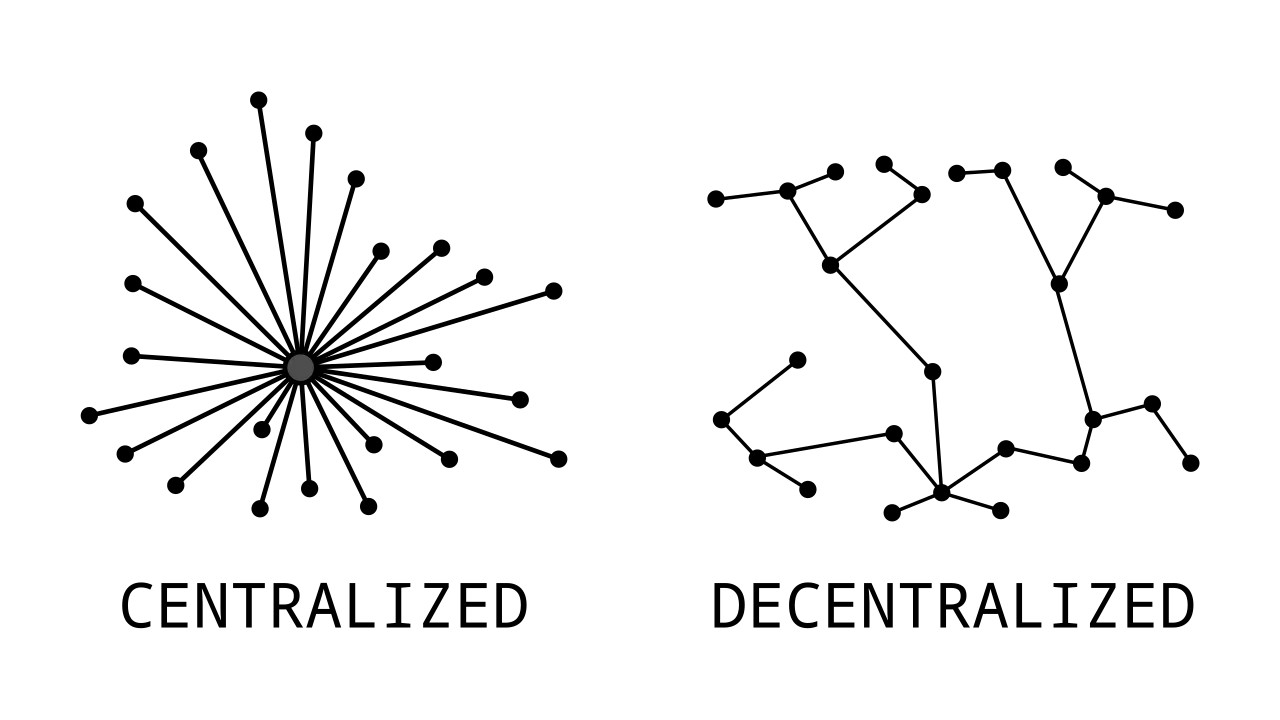

Interactions are only going to become more distributed across

applications, protocols, and the digital world, and continuity and

interoperability are going to become essential pieces of the puzzle.

Without portable data, digital ecosystems become broken and difficult

to navigate, or overly dependent on intermediaries.

In essence, the ability to carry your context with you should be a

foundational part of how the internet operates, and in this article,

we explore how Web3 infrastructure rethinks the data layer, moving

control away from platforms to people, and building the foundation for

a truly composable, portable internet.

A functioning data economy begins with the principle that users should

benefit from what they create.

This means deciding who can access their data, in what format, under

what conditions, and for how long. The design of the system needs to

be flexible enough to allow for nuance and specificity, which reflects

the wide range of data types and usage scenarios.

Rather than blindly assuming that all data belongs on a public

blockchain, the focus should be on how to structure and share data

safely. Privacy, consent, and verification need to work together. In

Web3, these qualities are implemented through decentralized identity

systems and verifiable credentials. Privacy-preserving proofs like

zero-knowledge techniques can also strengthen this model, allowing

users to verify facts without revealing sensitive data.

Credentials are structured attestations that record interactions,

purchases, memberships, attendance, and more. They operate as

controlled disclosures, tied to a persistent identity that is managed

by the user in a sovereign way. Credentials are able to carry embedded

logic, allowing them to expire, update, or adjust based on evolving

conditions, enabling a model where the user is able to bring their

data with them in a permissioned, contextual format.

This design creates a completely different approach to online trust.

Platforms no longer need to accumulate data directly, and users don’t

need to over-disclose. With verifiable credentials, trust is

externalized, and services can respond to real signals without

becoming data custodians themselves. Developers also gain the ability

to design applications that respond to proofs rather than profiles,

and product logic can trigger based on possession of a credential

rather than the presence of a user account.

What happens is this model reduces backend complexity, increases

composability, and shifts power back to the individual.

Where it belongs.

In traditional models, platforms act as both data collectors and

gatekeepers. The data users generate is stored in proprietary systems,

inaccessible from the outside and unusable elsewhere, which creates a

lot of friction, redundancy, and vendor lock-in.

When data becomes portable, that dynamic suddenly shifts, and

credentials give users the ability to carry their history,

permissions, and status across different ecosystems. Imagine a seller

with years of positive reviews on one platform being able to present

that reputation in a completely different context. The seller benefits

from continuity, and the new platform benefits from receiving

verifiable, low-risk onboarding.

This pattern holds across many roles, everything from contributors,

moderators, DAO members, learners, and creators that can build

reputations over time. These reputations have real value, and

credentials offer a standard way to express that value without forcing

re-verification or duplicate effort.

Uptick’s credential and DID stack is designed to support this flow.

Credentials are able to be issued in response to meaningful activity,

with the ability to be signed by trusted sources, and remain under

user control. However, it should be noted that trust in credentials

stems from their issuers, whether it’s a university, DAO, protocol, or

community registry, the value of a credential depends on the

reputation of the source.

Verifiable credentials work because they embed these trust anchors

directly, and systems like Uptick’s are being built so we can

integrate known registries or decentralized attestation frameworks to

provide clarity around who is authorized to issue which types of

credentials. Verification can happen anywhere, even without a direct

relationship between the issuer and the verifier. What we end up with

is a system where platforms don’t need to recreate identity or history

every time, and they can instead recognize it, validate it, and act

accordingly.

This improves the user experience, and also unlocks entirely new

composability patterns across applications.

As more and more applications start to implement verifiable

credentials, the ability to issue and validate them at scale depends

on having reliable access to on-chain and cross-chain data.

Developer-facing tools that support decentralized credential workflows

can streamline this process, allowing systems to verify real-world

user activity, digital asset ownership, or participation history

without depending on centralized APIs.

Access isn’t static, because it depends on roles, context, conditions,

and time. Credentials enable dynamic access logic, removing the need

for brittle permission systems tied to accounts and databases. Instead

of asking users to share sensitive personal details, services can

request proof. Age, residency, membership, purchase history, these are

all verifiable without revealing anything more than necessary.

This is the way to improve both privacy and security.

Using Uptick’s Vouch system as an example, credentials can be issued

directly to users and presented wherever needed. QR codes, links, and

in-app flows make the credential exchange process smooth, and expiry

and revocation conditions mean that outdated credentials no longer

grant access, which prevents abuse and reduces administrative stress.

This kind of programmable access also opens up a wide range of new

capabilities. Events could issue credentials to attendees that unlock

future perks, governance systems could weight votes based on verified

participation, or product trials could be limited to users with a

history of engagement, providing access that is targeted and fair.

All of this is supported without traditional gatekeeping, where

credentials can replace hard-coded logic with flexible, externalized

conditions. With this in place, services become much more adaptive,

and users gain the ability to shape their own access experiences.

The infrastructure supporting these credentials also needs to provide

consistent access to data across ecosystems. Services that can

integrate both real-time activity and historical records allow value

chains to be much more precise, particularly when it comes to

evaluating past behavior, transaction histories, or engagement

patterns.

Accessing that data in a decentralized way helps make sure that it

remains verifiable, tamper-resistant, and portable between different

services.

Where real-time visibility into digital asset activity matters, such

as tracking ownership, evaluating interactions, or managing

credentials, value chains rely on context, and sellers want to know

their buyers, creators want to understand their audiences, and

communities want to reward participation.

All of this requires information, but it doesn’t require

centralization.

When credentials carry that context, services can make decisions

without direct access to raw data. A seller doesn’t need a full

profile to offer a loyalty discount, only proof of purchase history,

and a DAO doesn’t really need wallet history to grant access to a

proposal, only confirmation that the user has contributed meaningfully

in the past.

Essentially, this model reconfigures data flow across verticals.

In commerce, retail partners can coordinate rewards by accepting

shared loyalty credentials, in media, creators can recognize verified

supporters regardless of platform, and in community spaces,

contributors can carry recognition that translates into access,

responsibility, or compensation. Rather than building data pipelines

between every service, credentials allow ecosystems to communicate

through proof. This is more scalable and more secure, as data stays

with the user, and services act only on what is presented and

verified.

This new structure gives rise to layered systems that are modular by

design, with each platform or product participating in a broader

ecosystem by contributing to and recognizing credentials, reshaping

the definition of a value chain, and extending it across services and

communities.

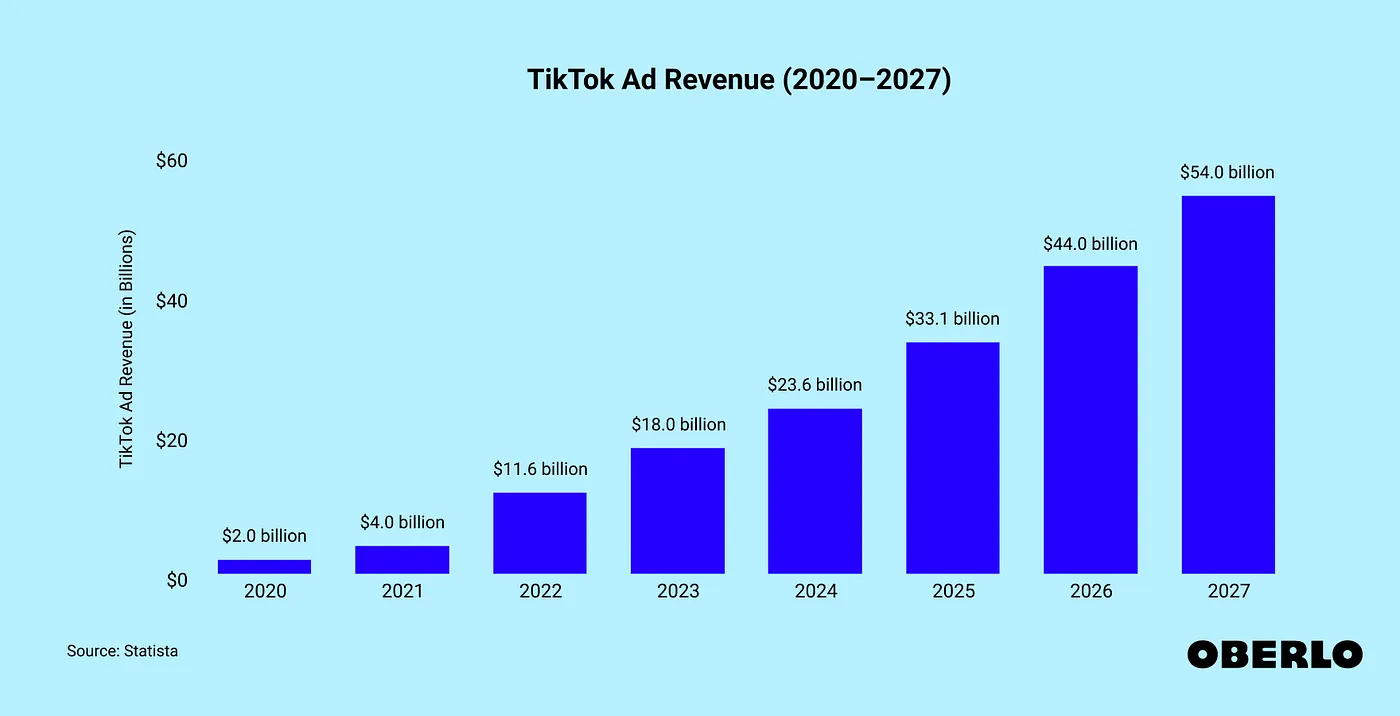

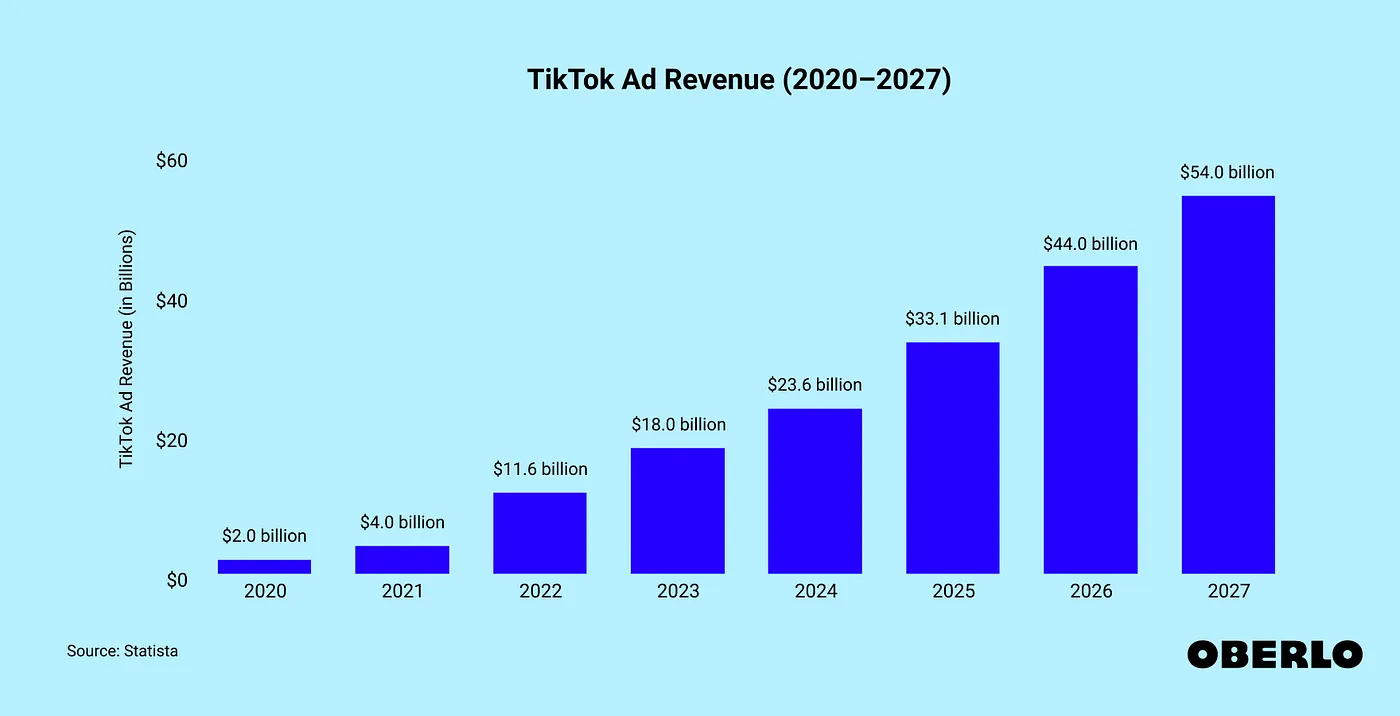

Targeted advertising has historically functioned by harvesting

behavior and predicting intent. Most of this activity happens in the

background, with very little transparency and no compensation, and

users are reduced to data points and audiences.

The Web3 data economy introduces a very different model where users

can represent themselves with purpose-built credentials. Instead of

being profiled, they present verifiable traits, and instead of being

monetized passively, they can engage in consent-based exchanges.

A credential might state that someone regularly attends music events

or frequently purchases independent fashion, and these signals are

extremely useful to brands and advertisers, but the user defines when

and how that information is revealed.

This approach has broader implications, because communities can

aggregate their data into collective pools, negotiating from a

position of strength, and creators can monetize audiences without

relying entirely on sponsorships or algorithms.

Data then becomes a currency backed by consent, rather than a

commodity extracted without permission.

As these patterns start to take shape, we are seeing a need for shared

infrastructure. Applications, services, and communities require a

common foundation to issue, verify, and accept credentials, and this

is what the decentralized data layer provides.

This structure encourages composability, as applications can build on

each other’s proofs. Protocols can reference shared standards, and

users can move freely, presenting only what they choose, with the

assurance that services will understand and respond appropriately.

This also unlocks interoperability across verticals, so a gaming proof

might provide early access to a ticketed event, or a contributor

credential might unlock a beta in a different ecosystem. Value follows

the user, shaped by context, and recognized by services that operate

with shared logic.

The decentralized data layer turns half-broken experiences into

connected ones, without collapsing them into uniformity. Each app or

product retains its identity, but interacts with others through a

shared grammar of verifiable signals.

To support this, credential systems need to rely on accurate,

verifiable data across ecosystems. Uptick Data Service (UDS) provides

that foundation, with real-time and historical data access across

chains, delivered through a decentralized framework built for

developers. This completes the stack needed to move from isolated

interactions to composable, verifiable data flows.

To make credential systems functional, data can’t just be portable, it

needs to also be queryable in context. Indexing plays a big role here,

as developers need reliable ways to surface historical credentials and

match logic to real-world state. This is where data services like UDS

can go way beyond simple storage, offering structured, queryable

endpoints that make verifiable data usable in live applications.

The final piece of the data puzzle is movement.

Data needs to be able to travel with the user, respond to context, and

trigger outcomes without becoming a burden or a risk. Ownership

without portability is static, and what matters is how data flows, how

it adapts to new environments, and how it supports the user at each

stage.

Credentials offer a structured way for this movement to take place,

giving data purpose and relevance, and the future of the digital world

depends on this shift. Over time, lock-in will become less viable, and

static profiles will feel increasingly outdated, but what will matter

is how easily data can move between ecosystems, how much control users

have over it, and how many services are willing to recognize it.

A composable data layer gives users leverage, makes applications more

adaptive, and builds trust into the flow of information. The next

generation of the internet needs movement, consent, and control, all

built on composable infrastructure.

The Web3 data economy is already starting to show signs of life, but

the systems that truly embrace movement, consent, and modular design

will shape what comes next for the decentralized data layer.